Apple’s recent decision to refuse the FBI access to the encrypted iPhone of terror suspect Syed Farook is the latest controversy in a long-running choice between two of our greatest (or at least most media-covered) modern evils: Terrorism and Privacy Invasion.

Last December, married couple Syed Farook and Tashfeen Malik opened fire at a Department of Public Health holiday party in San Bernardino, California. According to latimes.com, the couple killed 14 people before dying in a shootout with local law enforcement.

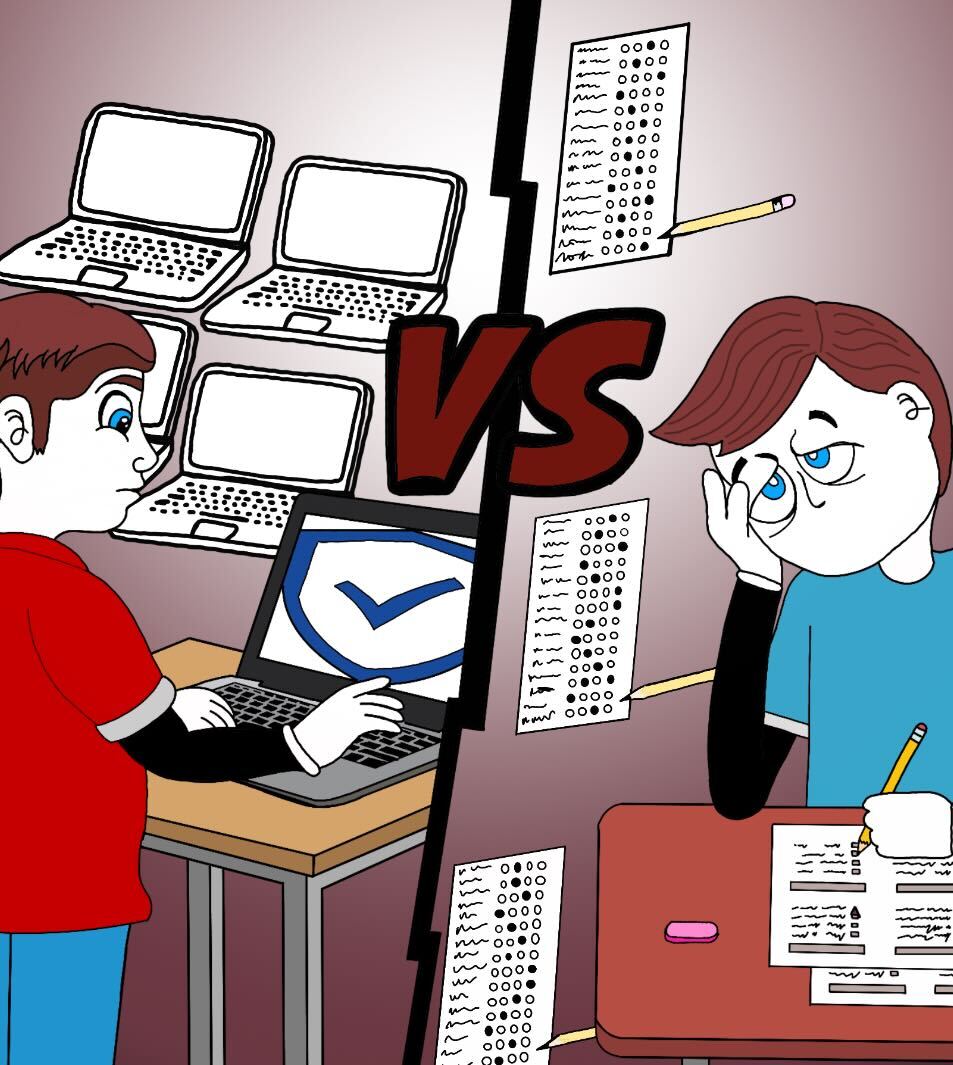

The FBI then opened a counter-terrorism investigation that now centers on Farook’s iPhone 5c. The phone is encrypted, and its passcode lock is set to delete all data after 10 incorrect passcode attempts. According to usatoday.com, encryption means the data on the phone is encoded against those without the correct passcode: all information on the phone appears scrambled to anyone trying to hack in.

The FBI investigation started several days after key provisions of the USA Freedom Act took effect, ending the NSA’s ability to store phone records of random U.S. citizens from the past five years. The FBI was then forced to go directly to Farook’s service provider, which can legally store two years’ worth of customer cellular data. This information can be looked at only with a warrant.

Unsatisfied with this information, the FBI has demanded that Apple create software to override the iPhone’s encryption passcode. Despite a California court order, Apple has refused. On its website, apple.com, the company has released a customer letter addressing the issue.

“The implications of the government’s demands are chilling,” the site reads. “If the government can use the All Writs Act to make it easier to unlock your iPhone, it would have the power to reach into anyone’s device to capture their data. The government could extend this breach of privacy and demand that Apple build surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge.”

While this seems like a large leap from the FBI’s request for Apple to unlock a single terror suspect’s iPhone, it doesn’t seem implausible. It does seem like a possibility that needs to be nipped in the regulatory bud as soon as possible.

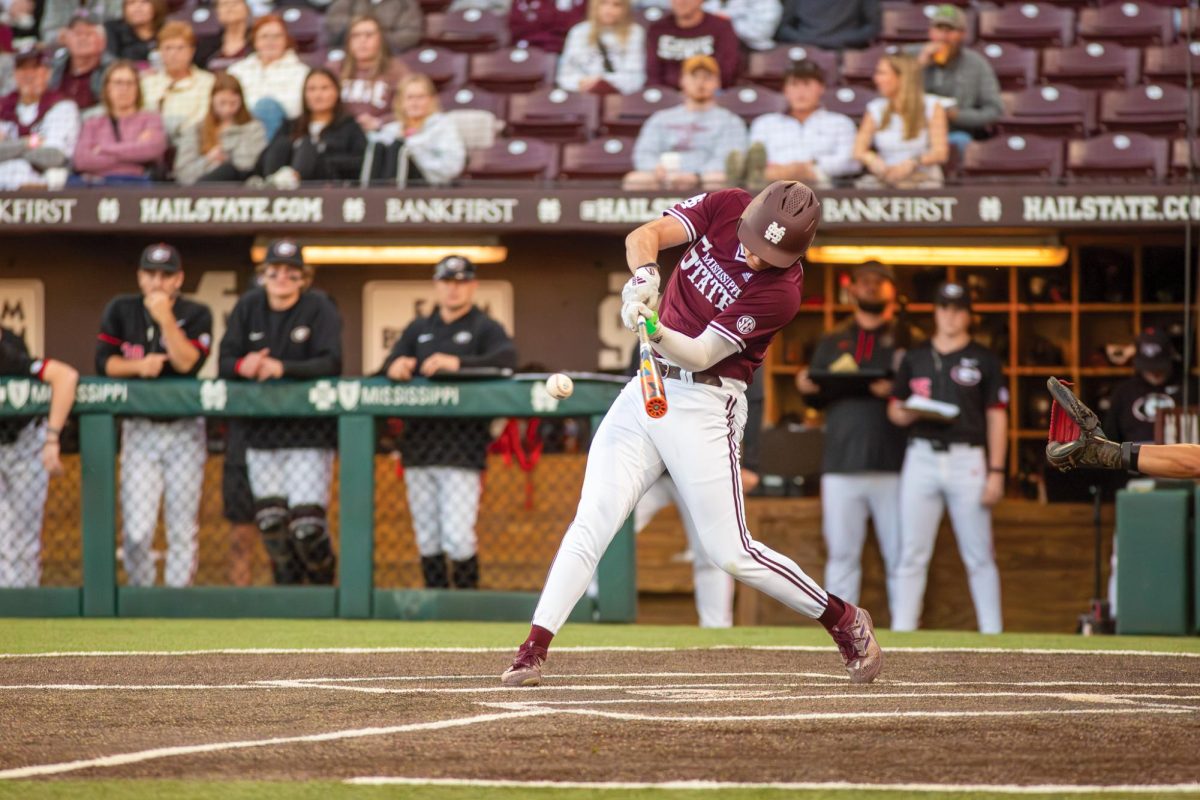

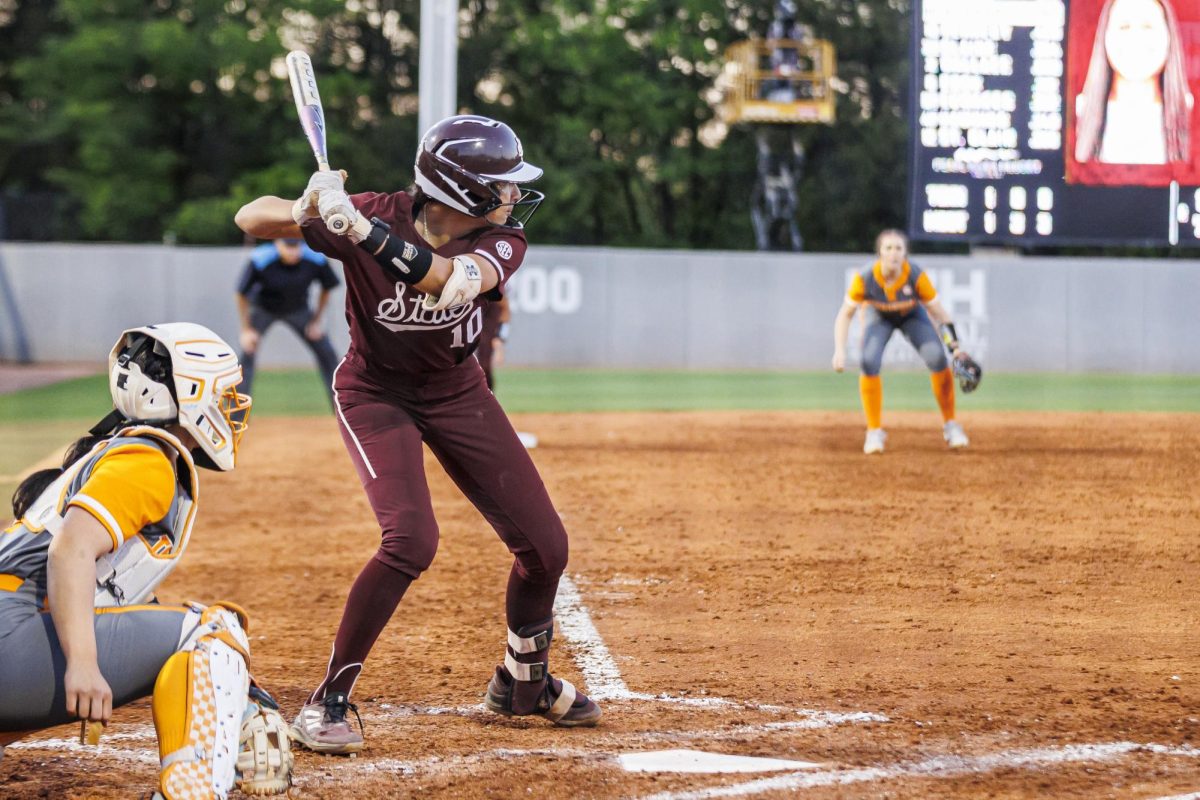

As someone whose capacity to remember things finished developing right around Sept. 11, 2001, I have grown up with an ever-expanding list of places where I should be paranoid about terrorist attacks: New York, school, the movies, sporting events and even Taylor Swift’s hallowed 1989 Concert Tour, where a conversation my friends and I had about potential bioterrorism-via-dry-ice really put a damper on the mood.

As someone who got her first cellphone as a late-aughts middle schooler, I went from tween to teen with an ever-expanding list of reasons I should be paranoid about someone going through my phone: texts to/from/about crushes and usage of the “b-word” in reference to my/other people’s moms. Nowadays it’s all about unflattering selfies, screenshots and Google searches, none of which feel fit for public consumption.

While my phone may be embarrassing (think all-of-the-above phone usage about, like, “ingrown pit hair”), it isn’t necessarily incriminating and, odds are, neither are the smartphones of most Americans. Like the majority of purulent skin lesions, most phones are somewhat gross but benign.

It is this harmlessness that leaves me somewhat torn. If most people don’t have much to hide, the potential breach of iPhone encryption doesn’t seem like a huge deal, just a creepy one. However, if most people have nothing terrorism-related to hide, it also seems unnecessary. So unnecessary that I, an individual whose laptop webcam is covered with tape, almost can’t believe the government would parlay this instance into hardcore surveillance of the average citizen.

But then I think of the NSA storing the phone records of random citizens, and Apple’s position makes sense. As much as I fear terrorism, it mainly fills me with an impersonal sense of dread. The general consensus on terrorists is that they are after everyone, which means they aren’t after any civilian in particular. As callous as it may be, it’s kind of comforting to think of terrorism as an odds game, because odds are, it isn’t going to affect me personally (at least until I run for president in 2036).

I am far more likely to be a victim of broad government surveillance than of terrorism, and privacy invasion always feels personal. My phone is always with me, and it documents nearly every event in my daily life. If it’s happened to me, I’ve probably texted, posted or Google-searched about it, and the idea that my private thoughts, pictures and misguided attempts at One Direction fanfiction could go public makes me want to throw up.

Overall, I think Apple is right to refuse the FBI backdoor access to the encrypted iPhone of Syed Farook—at least until more legal parameters are set concerning how the software can and cannot be used. I want to be safe at school, sports events and Taylor Swift stadium concerts. I don’t, however, want the FBI to be there with me, through my iPhone camera—at this point, it’s too much like my mind’s eye.